Multi-Label Text Classification

Topic classifier for Jewish texts

Check out the GitHub repo here.

Application:

- Must assign topics to 1 million unlabeled passages.

- Given 100k labeled examples.

Motivation:

- Research passages that fall under one topic.

- Given one passage, find others related to it by topic.

Priorities:

- High precision, medium recall.

- This matters for usability: a user would rather receive no topic label for a passage at all than an incorrect label.
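To make the precision/recall trade-off concrete, here is a minimal sketch of micro-averaged precision and recall over per-passage label sets. The function name, the toy data, and the convention of returning 1.0 when there is nothing to count are assumptions for illustration, not taken from the project's code.

```python
def micro_precision_recall(true_labels, predicted_labels):
    """Micro-averaged precision and recall over per-passage label sets."""
    tp = fp = fn = 0
    for truth, pred in zip(true_labels, predicted_labels):
        tp += len(truth & pred)  # predicted and correct
        fp += len(pred - truth)  # predicted but wrong
        fn += len(truth - pred)  # correct but missed
    # Assumed convention: an empty denominator counts as a perfect score.
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 1.0
    return precision, recall


# Hypothetical toy data: three passages with true and predicted topics.
truth = [{"kings", "war"}, {"food"}, {"laws", "shabbat"}]
pred = [{"kings"}, set(), {"laws"}]

precision, recall = micro_precision_recall(truth, pred)
print(precision, recall)
```

In this toy case the classifier never assigns a wrong label, so precision is perfect, but it misses three of the five true labels. That is the kind of behaviour the priorities above prefer over the reverse.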

Modules:

1. Preprocessing

- Cleaning: remove duplicates and empty rows; keep only words, with no punctuation or HTML characters.

- NLP: remove prefixes from Hebrew words; apply more tools for English, e.g. stemming and stop-word removal.
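The cleaning step could look roughly like the sketch below, which uses only the Python standard library. The function names and the sample passages are hypothetical, and the Hebrew prefix removal and English stemming mentioned above are not shown.

```python
import html
import re


def clean_passage(text):
    """Strip HTML tags/entities and punctuation, keeping only words."""
    text = html.unescape(text)                  # "&amp;" -> "&"
    text = re.sub(r"<[^>]+>", " ", text)        # drop HTML tags
    text = re.sub(r"[^\w\s]|[\d_]", " ", text)  # drop punctuation, digits, underscores
    return " ".join(text.split())               # collapse whitespace


def clean_corpus(passages):
    """Clean each passage, then drop empties and exact duplicates (order kept)."""
    seen = set()
    result = []
    for passage in passages:
        cleaned = clean_passage(passage)
        if cleaned and cleaned not in seen:
            seen.add(cleaned)
            result.append(cleaned)
    return result


# Hypothetical raw rows: a tagged passage, its duplicate, and two empty rows.
raw = ["<b>David</b> battles Goliath!", "David battles Goliath", "   ", "&amp;"]
print(clean_corpus(raw))
```

Deduplicating after cleaning, rather than before, catches rows that differ only in markup or punctuation.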

2. Vectorize

- Convert to numbers — one row for every passage. One column for every word in the vocabulary.

- Used TF-IDF to reduce the influence of words that appear commonly across all documents.
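As a rough illustration of why TF-IDF discounts ubiquitous words, the sketch below computes the plain textbook weighting (term frequency times log of inverse document frequency) by hand. It assumes non-empty, already cleaned passages. scikit-learn's `TfidfVectorizer` uses a smoothed and normalized variant by default, so its numbers would differ.

```python
import math
from collections import Counter


def tfidf_matrix(passages):
    """Return (vocabulary, rows); rows[i][j] is the TF-IDF of word j in passage i."""
    tokenized = [p.lower().split() for p in passages]
    vocabulary = sorted({word for tokens in tokenized for word in tokens})
    n_docs = len(tokenized)
    # Document frequency: in how many passages does each word appear?
    doc_freq = Counter(word for tokens in tokenized for word in set(tokens))
    rows = []
    for tokens in tokenized:
        counts = Counter(tokens)
        rows.append([
            (counts[word] / len(tokens)) * math.log(n_docs / doc_freq[word])
            for word in vocabulary
        ])
    return vocabulary, rows


# Hypothetical toy corpus: "the" appears in every passage.
passages = ["the king fought", "the prophet spoke", "the king spoke"]
vocabulary, rows = tfidf_matrix(passages)
for row in rows:
    print([round(weight, 3) for weight in row])
```

The column for "the" is zero in every row because the word occurs in all passages, while a rarer word such as "fought" receives the largest weight in its row.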

3. Classify using Hierarchy

- Scheme: Shrink the playing field from 4000 topics down to 25 top-level table of contents (TOC) topics, then classify among the 100 or 200 subtopics under each matching TOC topic.

- Example: David battles Goliath. Stage I: which topics from the TOC match? E.g. people and stories, but not laws or foods. Stage II: which child topics under those broad categories? E.g. history of Israel, not history of Bavel. Under people, leaders and kings, not women or patriarchs.
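The two-stage scheme can be sketched as a small routing function. Everything below is an assumption for illustration: the keyword matchers are hypothetical stand-ins for the trained classifiers, and the toy hierarchy (including which parent each subtopic sits under) is invented.

```python
def classify_hierarchically(passage, toc_classifier, subtopic_classifiers):
    """Stage I picks broad TOC topics; Stage II only consults their children."""
    results = {}
    for toc_topic in toc_classifier(passage):
        results[toc_topic] = subtopic_classifiers[toc_topic](passage)
    return results


def keyword_classifier(keywords_by_topic):
    """Hypothetical stand-in for a trained model: match on keywords."""
    def predict(passage):
        words = set(passage.lower().split())
        return [topic for topic, kws in keywords_by_topic.items() if words & kws]
    return predict


# Invented toy hierarchy, loosely following the example above.
toc = keyword_classifier({
    "people": {"david", "goliath"},
    "stories": {"battles"},
    "foods": {"bread"},
})
subs = {
    "people": keyword_classifier({"leaders and kings": {"david"},
                                  "patriarchs": {"abraham"}}),
    "stories": keyword_classifier({"history of Israel": {"goliath"},
                                   "history of Bavel": {"nebuchadnezzar"}}),
    "foods": keyword_classifier({"grains": {"bread"}}),
}

result = classify_hierarchically("David battles Goliath", toc, subs)
print(result)
```

Because the "foods" branch is rejected in Stage I, its subtopic classifier is never called, which is where the saving over 4000 flat classifiers would come from.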

Algorithms

Multinomial Naive Bayes

1. How it works:

- The probability that a text passage belongs to a certain topic is proportional to the probability of that text given the topic, times the probability of that topic.

- It assumes that all features (words) are independent of each other given the topic. This is often false, yet the algorithm often performs well nevertheless.

- [projected likelihood of a sentence belonging to a certain topic] = [overall probability of that topic] x [product of the likelihoods of each word in the sentence given that topic].

2. Why it is good:

- Specific vocabulary words will be associated with particular topics.

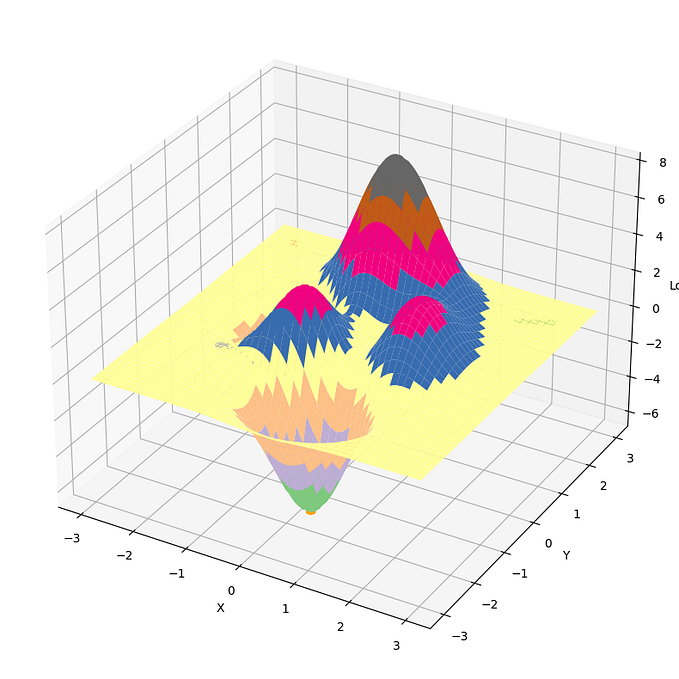
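The scoring rule described above (topic prior times the product of per-word likelihoods) can be written out in a few lines. This is a sketch, not the project's implementation: it adds add-one smoothing and works in log space, both standard choices that the post does not mention, and it picks a single best topic rather than running one yes/no model per topic.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(passages, topics):
    """Count topics and words per topic; returns the pieces needed for scoring."""
    topic_counts = Counter(topics)
    word_counts = defaultdict(Counter)
    for passage, topic in zip(passages, topics):
        word_counts[topic].update(passage.lower().split())
    vocabulary = {word for counts in word_counts.values() for word in counts}
    return topic_counts, word_counts, vocabulary


def log_score(passage, topic, model):
    """log P(topic) + sum of log P(word | topic), with add-one smoothing."""
    topic_counts, word_counts, vocabulary = model
    total_words = sum(word_counts[topic].values())
    score = math.log(topic_counts[topic] / sum(topic_counts.values()))
    for word in passage.lower().split():
        if word in vocabulary:  # ignore words never seen in training
            likelihood = (word_counts[topic][word] + 1) / (total_words + len(vocabulary))
            score += math.log(likelihood)
    return score


def predict(passage, model):
    """Return the topic with the highest log score."""
    return max(model[0], key=lambda topic: log_score(passage, topic, model))


# Hypothetical toy training set.
train_passages = ["king battle sword", "king throne", "bread wine", "bread oil wine"]
train_topics = ["kings", "kings", "foods", "foods"]
model = train_naive_bayes(train_passages, train_topics)

print(predict("the king ate bread with a sword", model))
print(predict("wine and oil", model))
```

Summing logarithms instead of multiplying raw probabilities avoids numeric underflow on long passages.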

Linear Support Vector Classification

1. How it works:

- In the high-dimensional space of all data points, topic labels divide the samples into distinct groups.

- The algorithm finds the boundary that best cuts between them.

- That is, the boundary that is farthest from the nearest members of both groups on opposing sides.

- This enables the algorithm to classify new, unseen samples from the test set, i.e. by which side of the boundary they fall on.

- Key feature of the algorithm: adding a dimension can enable a better divide between the groups.

- E.g. two groups may not be separable in two dimensions, but become separable when you add a third dimension.

2. Why it is good:

- The dimensionality is high, thanks to the large number of words.

- So we expect the groups to separate from each other.
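The claim that an extra dimension can make groups separable is easiest to see one dimension lower than the example above. The sketch below is not an SVM solver; it only checks whether a single cut point separates two classes, first along x alone and then along an added x² axis. The data and function name are hypothetical.

```python
def separable_by_threshold(values, labels):
    """True if one cut point puts each class entirely on its own side."""
    group_a = [v for v, label in zip(values, labels) if label == 0]
    group_b = [v for v, label in zip(values, labels) if label == 1]
    return max(group_a) < min(group_b) or max(group_b) < min(group_a)


# Hypothetical 1-D data: class 1 sits on both sides of class 0.
xs = [-3, -1, 0, 1, 3]
labels = [1, 0, 0, 0, 1]

in_one_dimension = separable_by_threshold(xs, labels)
along_added_axis = separable_by_threshold([x * x for x in xs], labels)
print(in_one_dimension, along_added_axis)
```

No threshold on x works, but in the (x, x²) plane a horizontal line such as x² = 4 splits the classes cleanly. In text classification the extra dimensions come from the vocabulary itself rather than from engineered features like x².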

Classification

Binary Relevance

- Series of 4000 binary classifiers.

- Advantage = Efficient computationally, just yes/no for each topic.

- Disadvantage = Doesn’t reflect the interdependency of topics on each other.
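Binary relevance amounts to turning each passage's label set into one yes/no target per topic, with each target column then trained independently. A minimal sketch of that transformation, with hypothetical names and toy labels:

```python
def to_binary_targets(label_sets, topics):
    """One 0/1 target column per topic (the binary relevance transformation)."""
    return {
        topic: [int(topic in labels) for labels in label_sets]
        for topic in topics
    }


# Hypothetical toy labels for three passages.
label_sets = [{"kings", "war"}, {"food"}, {"kings"}]
topics = sorted(set().union(*label_sets))
targets = to_binary_targets(label_sets, topics)

for topic, column in targets.items():
    print(topic, column)
```

Each column is learned on its own, so the fact that "war" only ever appears together with "kings" in this toy data is invisible to the classifiers.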

Label powerset

- Transforms the problem from multi-label to multi-class.

- Use all unique label combinations found in training data.

- Disadvantages: This can run slowly, because there can be very many combinations of different topics. If the test set contains a label combination that isn’t in the training set, that combination can never be predicted, so some topics will be missed.
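The label powerset transformation can be sketched as a mapping from each distinct label combination to a single class id. The names and toy data below are hypothetical.

```python
def to_powerset_classes(label_sets):
    """Map each distinct label combination to one class id."""
    class_ids = {}
    encoded = []
    for labels in label_sets:
        key = frozenset(labels)
        if key not in class_ids:
            class_ids[key] = len(class_ids)  # next unused id
        encoded.append(class_ids[key])
    return encoded, class_ids


# Hypothetical training labels: three distinct combinations.
train = [{"kings", "war"}, {"food"}, {"kings", "war"}, {"kings"}]
encoded, class_ids = to_powerset_classes(train)
print(encoded)

# A combination that never occurred in training has no class to predict.
unseen_is_known = frozenset({"kings", "food"}) in class_ids
print(unseen_is_known)
```

With thousands of topics the number of distinct combinations can grow very large, which is the slowness mentioned above. The final check shows the second drawback: a new combination simply has no class.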

Classifier chain

- Tries to be more efficient than Label Powerset, yet still learn the associations between topics that often appear together, unlike Binary Relevance.

My Choice: I chose Linear SVC and Binary Relevance, because they were not only more accurate but also faster than the others. In theory, though, tuning the parameters of the other options could yield better results from them.
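A minimal sketch of the chosen combination, assuming scikit-learn is installed. Here `OneVsRestClassifier` stands in for binary relevance (one `LinearSVC` per topic); the project itself may have used scikit-multilearn's own wrapper instead. The passages and labels are invented toy data.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.multiclass import OneVsRestClassifier
from sklearn.preprocessing import MultiLabelBinarizer
from sklearn.svm import LinearSVC

# Hypothetical toy data: five passages, each with a set of topics.
passages = [
    "the king drew his sword in battle",
    "the king sat on the throne",
    "bake the bread with oil",
    "wine and bread for the feast",
    "the king gave bread to the army",
]
label_sets = [{"kings", "war"}, {"kings"}, {"food"}, {"food"}, {"kings", "food"}]

# Encode label sets as a 0/1 indicator matrix, one column per topic.
binarizer = MultiLabelBinarizer()
Y = binarizer.fit_transform(label_sets)

# One row per passage, one TF-IDF column per vocabulary word.
vectorizer = TfidfVectorizer()
X = vectorizer.fit_transform(passages)

# Binary relevance: an independent linear SVC for every topic column.
model = OneVsRestClassifier(LinearSVC())
model.fit(X, Y)

predicted = model.predict(vectorizer.transform(["the king drew his sword"]))
print(binarizer.inverse_transform(predicted))
```

On a corpus this small the predictions themselves mean little. The point is the shape of the pipeline: vectorize, binarize the labels, then fit one linear classifier per topic.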

Tools, tech, platforms

- Pandas for DataFrames.

- NLTK for text preprocessing.

- scikit-learn and scikit-multilearn for vectorization, training and classification algorithms.

- Matplotlib and Seaborn for visualization.

- VS Code as the IDE, connected to a remote Kubernetes machine, because the database was too large for my computer.

Personal contribution

I was given the idea for this project, along with the topic ontology, that is, a knowledge graph connecting all topics with their parent nodes etc.

What I did was construct the algorithm from the ground up.

I based it on researching published papers on hierarchical classification and the documentation of specialized tools like scikit-multilearn, and on deciding between their algorithms.

Challenges in the project; and how I responded…

- Too many topics: For starters, I used just a few commonly occurring topics.

- Too many passages: I took a sample that was quick to run, but still showed statistical significance in the classification results (my experiments showed this to be about 40k).

- Class imbalance: Some topics had many more passages than others. I simply ignored topics that didn’t have a threshold number of passages; I found that having 100 was sufficient. If I had more time, I could under-sample the more popular topics so as to balance the populations.

- Inconsistent topic order: I noticed that some topics were classifying reasonably well, but others were failing completely. I checked where the order of topics in the DataFrame was being determined, and it was not consistent. In one place it was alphabetical, but in another it was by popularity, i.e. how many passages belong to that topic. This took a keen eye to discover, but was straightforward to fix.
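Two of the responses above, dropping rare topics and fixing the topic order, can be handled in one place by computing a single canonical topic list and using it for every encoding step. The sketch below shows one way to do that; the function names are hypothetical and the threshold is lowered to 2 so the toy data has something to filter.

```python
from collections import Counter


def select_topics(label_sets, min_passages):
    """Topics with at least min_passages passages, in one fixed alphabetical order."""
    counts = Counter(topic for labels in label_sets for topic in labels)
    return sorted(topic for topic, n in counts.items() if n >= min_passages)


def to_indicator_rows(label_sets, topic_order):
    """Encode labels as 0/1 rows whose columns always follow topic_order."""
    return [[int(topic in labels) for topic in topic_order] for labels in label_sets]


# Hypothetical toy labels; the post used a threshold of 100 on the real data.
label_sets = [{"kings", "war"}, {"kings"}, {"food", "kings"}, {"war"}]
topic_order = select_topics(label_sets, min_passages=2)
rows = to_indicator_rows(label_sets, topic_order)

print(topic_order)
print(rows)
```

Passing the same `topic_order` to both training and evaluation keeps column i meaning the same topic everywhere, which avoids the alphabetical-versus-popularity mismatch described above.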

Number of people in project:

I worked alone. My manager was available when I had questions, but the approach was to work out everything I could by myself, and only ask for help when I hit a brick wall that I believed someone else could solve quickly. I think this is best for both efficiency and my growth.

Amount of time taken for the project:

- I was given ten weeks. I finished early, I think because I started simply and constantly tested and evaluated before building up to a larger scale and more complex algorithms.

- I then started to make the model more sophisticated. The results were very revealing, so I was given more time to progress further.

Improvements

- Optimize the choice of table of contents, e.g. to balance the playing field. I did this with laws, which had many more topics than the others.

- Implement rule-based logic, especially to increase recall.

- Capture semantic content

  1. Lemmatization for English, not just stemming.

  2. Word embeddings: connect similar terms, rather than just counting occurrences of words.

- Grid search for best hyperparameters in training.

  1. N-grams = 2 and 3 were better than 1.

  2. Learning rate

  3. Batch size
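For readers unfamiliar with the n-gram setting, the sketch below shows what word 2-grams and 3-grams look like as features. The function is a hypothetical illustration; in practice the vectorizer would normally generate these, for example through an n-gram range parameter.

```python
def word_ngrams(text, n_values=(1, 2, 3)):
    """All word n-grams of the requested lengths, in reading order per length."""
    words = text.lower().split()
    return [
        " ".join(words[i:i + n])
        for n in n_values
        for i in range(len(words) - n + 1)
    ]


bigrams_and_trigrams = word_ngrams("David battles Goliath", n_values=(2, 3))
print(bigrams_and_trigrams)
```

Multi-word features such as "david battles" keep some word order that single words lose, which may be part of why the longer n-grams scored better here.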

Drawbacks

- Remote environment often crashed.

- Solution: Improvise! I used Colab and my local machine on a smaller version of the data set.