Attention Mechanism

A Glimpse at How NLP Uses Attention

The attention mechanism is a neural network component used in natural language processing (NLP) to interpret and understand language. As the name suggests, it is designed to help a model “pay attention” to the parts of a sentence or document that matter most for the task at hand, rather than treating every word as equally important.

Attention is a fundamental concept in modern machine learning, and especially in NLP. It gives a model a better grasp of the text by assigning each word (more precisely, each token) a weight that reflects how relevant it is in the current context. These weights are not fixed in advance: the model computes them from the input, typically by scoring each token and normalizing the scores with a softmax so that they are non-negative and sum to 1.
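The step that turns relevance scores into weights can be sketched in a few lines. This is a minimal illustration assuming NumPy; the tokens and the raw scores are hypothetical values chosen by hand, whereas a real model would compute the scores itself.

```python
import numpy as np

def softmax(scores: np.ndarray) -> np.ndarray:
    """Turn raw relevance scores into weights that are non-negative and sum to 1."""
    shifted = scores - np.max(scores)  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()

# Hypothetical relevance scores for the tokens of a short sentence.
tokens = ["the", "fluffy", "orange", "cat", "slept"]
scores = np.array([0.1, 1.0, 0.8, 2.5, 1.5])

weights = softmax(scores)
for token, w in zip(tokens, weights):
    print(f"{token:>7}: {w:.3f}")
```

Every token keeps a non-zero weight; higher scores simply translate into a larger share of the total.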

The attention mechanism is widely used in many applications of NLP, including machine translation, summarization, question answering, and text classification. Let’s take a look at how the attention mechanism works and why it is so important for machine understanding of language.

What Does Attention Do?

The attention mechanism helps a model interpret words and phrases in context. It does this by focusing on the parts of the text that are most relevant and down-weighting the rest, rather than discarding them outright. For example, when interpreting a sentence, the model might weight the key nouns and verbs more heavily than incidental modifiers. These preferences are not hand-coded rules; they are learned from data and change with context. This helps the model concentrate on the main ideas rather than getting bogged down in less important details.

Attention also tends to improve accuracy. This is especially useful in machine translation, where the quality of the output depends on correctly interpreting the source text: instead of compressing the entire source sentence into a single fixed-length vector, a model with attention can look back at the relevant source words each time it produces a word of the translation.
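To make the translation case more concrete, here is a minimal sketch of additive (Bahdanau-style) attention for a single decoding step, assuming NumPy. The parameter names `W_dec`, `W_enc`, and `v`, the dimensions, and the random values are all hypothetical stand-ins for what a trained model would learn; the point is only to show how the current decoder state is scored against every source position and turned into a context vector.

```python
import numpy as np

rng = np.random.default_rng(0)

def softmax(x: np.ndarray) -> np.ndarray:
    e = np.exp(x - np.max(x))  # subtract the max for numerical stability
    return e / e.sum()

def additive_attention(decoder_state, encoder_states, W_dec, W_enc, v):
    """Score every source position against the current decoder state,
    then return the attention weights and the weighted context vector."""
    # encoder_states: (src_len, hidden); decoder_state: (hidden,)
    projected = np.tanh(encoder_states @ W_enc.T + decoder_state @ W_dec.T)  # (src_len, attn_dim)
    scores = projected @ v                  # one score per source word: (src_len,)
    weights = softmax(scores)               # attention over the source sentence
    context = weights @ encoder_states      # weighted sum of source vectors: (hidden,)
    return weights, context

hidden, attn_dim, src_len = 8, 16, 5
# Hypothetical, untrained parameters: random values for illustration only.
W_dec = rng.normal(size=(attn_dim, hidden))
W_enc = rng.normal(size=(attn_dim, hidden))
v = rng.normal(size=attn_dim)

encoder_states = rng.normal(size=(src_len, hidden))  # one vector per source word
decoder_state = rng.normal(size=hidden)              # state while producing the next target word

weights, context = additive_attention(decoder_state, encoder_states, W_dec, W_enc, v)
print("weights:", np.round(weights, 3))
print("context shape:", context.shape)
```

At each output step the decoder state changes, so the weights over the source words change too; that is what lets the model consult different source words for different target words.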

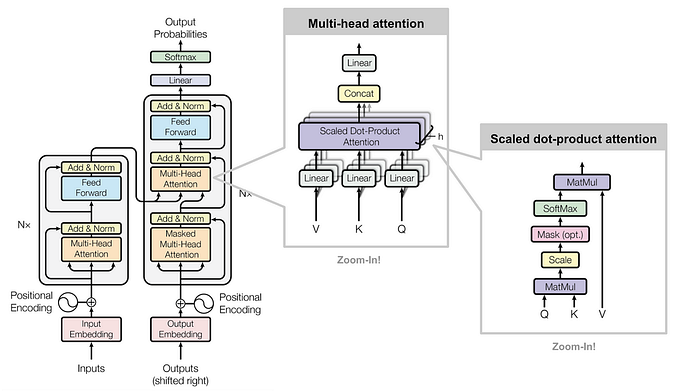

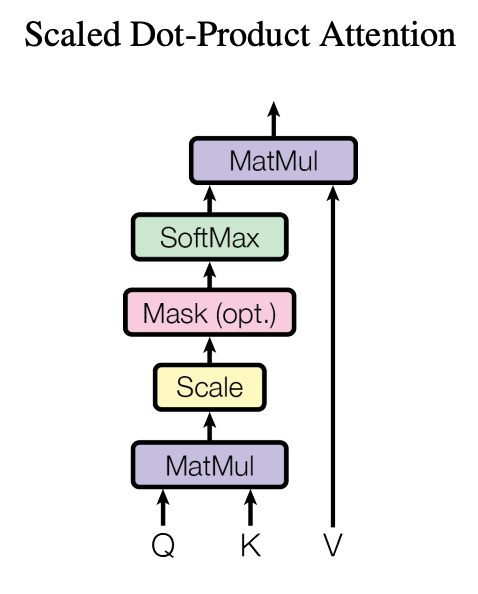

How Does Attention Work?

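The attention mechanism works by assigning weights to the words (tokens) of a sentence or document. In the widely used query-key-value formulation, the model compares a query vector for the position it is processing against a key vector for every token to produce a relevance score, normalizes the scores into weights (typically with a softmax), and then takes a weighted sum of the tokens' value vectors. The result is a context-aware representation that emphasizes what is most relevant and de-emphasizes what is not.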
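The steps above correspond to scaled dot-product attention, softmax(QKᵀ / √d_k)V, the form popularized by the Transformer architecture. The sketch below assumes NumPy and uses random matrices as stand-ins for the query, key, and value vectors that a real model would obtain from learned projections.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    e = np.exp(x - np.max(x, axis=axis, keepdims=True))  # numerically stable
    return e / np.sum(e, axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """Q: (n_queries, d_k), K: (n_keys, d_k), V: (n_keys, d_v)."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)       # relevance of every key to every query
    weights = softmax(scores, axis=-1)    # each row sums to 1
    return weights @ V, weights           # weighted sum of value vectors

rng = np.random.default_rng(42)
Q = rng.normal(size=(2, 4))   # 2 positions asking "what is relevant to me?"
K = rng.normal(size=(3, 4))   # 3 tokens that can be attended to
V = rng.normal(size=(3, 6))   # the information each of those tokens carries
output, weights = scaled_dot_product_attention(Q, K, V)
print(weights.round(3))
print(output.shape)  # (2, 6)
```

Dividing by √d_k keeps the scores from growing with the vector size, which would otherwise push the softmax toward putting nearly all weight on a single token.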

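For example, in the sentence “The fluffy orange cat slept because it was tired,” when the model processes “it”, it might assign a higher weight to “cat” than to “fluffy” or “orange”. Which words receive the highest weights depends on what the model is computing at that moment, and these context-dependent weights help it interpret the sentence more accurately.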
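A toy version of the cat example, assuming NumPy and hand-picked two-dimensional key vectors (purely hypothetical; real models learn far larger vectors from data). One dimension loosely means “looks like an entity” and the other “looks like a description”. The same words receive different weights depending on the query: a query looking for the entity favors “cat”, while a query looking for a description favors “fluffy”.

```python
import numpy as np

def attention_weights(query, keys):
    """Dot-product scores between one query and each key, normalized with softmax."""
    scores = keys @ query / np.sqrt(len(query))
    e = np.exp(scores - scores.max())
    return e / e.sum()

tokens = ["the", "fluffy", "orange", "cat"]
# Hypothetical 2-d key vectors: [looks like an entity, looks like a description]
keys = np.array([
    [0.0, 0.0],   # the
    [0.1, 1.0],   # fluffy
    [0.1, 0.9],   # orange
    [1.0, 0.2],   # cat
])

entity_query = np.array([2.0, 0.0])       # e.g. a later "it" asking what it refers to
description_query = np.array([0.0, 2.0])  # asking how the cat is described

for name, q in [("entity", entity_query), ("description", description_query)]:
    w = attention_weights(q, keys)
    print(name, dict(zip(tokens, w.round(3))), "-> top:", tokens[int(np.argmax(w))])
```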

What Are the Benefits of Attention?

As discussed above, attention lets a model focus on the most relevant parts of its input, which can improve both accuracy and the efficiency of learning. It also helps the model pick up the patterns in the data that matter most for its predictions. Some benefits of attention include:

- Improved accuracy: Attention lets a model focus on the parts of the input that are most relevant to each prediction, which often helps it identify patterns and make better predictions.

- Improved efficiency: Unlike recurrent networks, which process a sequence one step at a time, attention layers compare all positions at once using matrix multiplications. This parallelizes well on modern hardware and can shorten training times. One caveat: standard self-attention compares every position with every other position, so its cost grows quadratically with sequence length.

- Reduced overfitting: By weighting relevant inputs more heavily than irrelevant ones, attention may help reduce overfitting in some settings, although it is not a regularizer in itself. Note that attention does not discard the other inputs; it still considers all of them but gives them smaller weights.
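The efficiency point is easiest to see in self-attention, where the scores for every pair of positions come out of a single matrix multiplication, so all positions are handled in parallel rather than one step at a time. The same sketch also shows the cost: the score matrix has seq_len squared entries. This assumes NumPy and, as a simplification, uses the input vectors `X` directly as both queries and keys instead of learned projections.

```python
import numpy as np

def self_attention_scores(X):
    """X: (seq_len, d). Returns the (seq_len, seq_len) matrix of pairwise scores,
    computed for all positions at once with a single matrix multiplication."""
    return X @ X.T / np.sqrt(X.shape[1])

rng = np.random.default_rng(0)
d = 16
for seq_len in (8, 16, 32):
    X = rng.normal(size=(seq_len, d))
    S = self_attention_scores(X)
    # Doubling seq_len quadruples the number of scores: 64, 256, 1024.
    print(seq_len, S.shape, S.size)
```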
